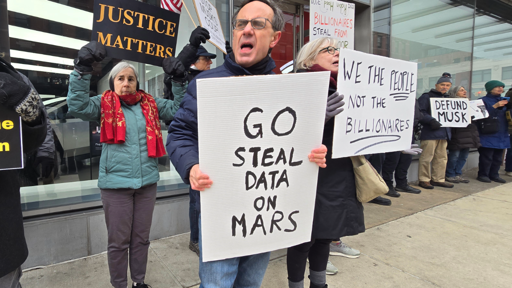

“Go steal data on Mars” is a rockin’ sign. 👍

Hopefully our AI overlord will be benevolent

AI is going to be used to hurt so many people. Tech bros steal and hurt as many people as possible for their profits.

“Go steal data on Mars” went straight into my soul archive!

It doesn’t have to be.

That’s why pushing locally run, sanely implemented LLMs is so important, for the same reason you’d promote Lemmy instead of just telling people not to use Reddit.

This is my biggest divergence with Lemmy’s political average, probably: AI haters are going to bring this to reality, as they are just pushing out “dangerous” local LLMs in favor of crappy corporate UIs.

It’s a multi-faceted problem.

Opaqueness and lack of interop are one thing. (Although, I’d say the Lemmy/Reddit comparison is a bit off-base, since those center around user-to-user communication, so prohibition of interop is a bigger deal there.) Data dignity or copyright protection is another thing.

And also there’s the fact that anything can (and will) be called AI these days.

For me, the biggest problem with generative AI is that its most powerful use case is what I’d call “signal-jamming”.

That is: Creating an impression that there is a meaningful message being conveyed in a piece of content, when there actually is none.

It’s kinda what it does by default. So the fact that it produces meaningless content so easily, and even accidentally, creates a big problem.

In the labor market, I think the problem is less that automated processes replace your job outright and more that if every interaction is mediated by AI, it dilutes your power to exert control over how business is conducted.

As a consumer, having AI as the first line of defense in consumer support dilutes how much you can hold a seller responsible for their services.

In the political world, astro-turfing has never been easier.

I’m not sure how much fighting back with your own AI actually helps here.

If we end up just having AIs talk to other AIs as the default for all communication, we’ve pretty much forsaken the key evolutionary feature of our species.

It’s kind of like solving nuclear proliferation by perpetually launching nukes from every country to every country at all times forever.

Maybe if AI talks to AI for long enough, they get smart 🤔

It’s more that we genuinely don’t see the net benefit of LLMs in general. Most of us are not programmers who need something to help “efficiency” up our speed of making code. I am perfectly capable of doing research, cataloging sources, and producing my own writing.

I can see marginal benefit for those who struggle with writing, but the problem therein is that they still need to run whatever their LLM spits out past another human to make sure it’s actually accurate or well written. In the end, with all of it you still need human editors and at that point, why have the LLM at all?

I’d love to hear what problem you think LLMs actually solve.

> Most of us are not programmers who need something to help “efficiency” up our speed of making code.

This presupposes that programmers need that shit, which isn’t true either.

Not the parent, but LLMs don’t solve anything; they allow more work with less effort expended in some spaces. Just as the horse-drawn plough didn’t solve any problem that couldn’t be solved by people tilling the earth by hand.

As an example, my partner is an academic, and the first step in working on a project is often a literature search of existing publications. This can be a long process, even more so if you are moving outside your typical field into something adjacent (you have to learn what exactly you are looking for). I tried setting up a locally hosted, LLM-powered research tool: you ask it a question and it goes away, searches arXiv for relevant papers, refines its search query based on the abstracts it got back, and iterates. At the end you get summaries of what it thinks is the current SotA for the question, along with a list of links to papers that it thinks are relevant.

It’s not perfect, as you’d expect, but it turns a minute spent typing out a well-thought-out question into hours’ worth of head start on the research surrounding your question (and does it all without sending any data to OpenAI et al). Getting you over that initial hump of not knowing exactly where to start is where I see a lot of the value of LLMs.
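For anyone curious, the search-and-refine loop described above might look roughly like this. It is a minimal sketch under loud assumptions, not the actual tool: `Paper`, `literature_search`, `toy_search`, `toy_refine` and the tiny in-memory `CORPUS` are all hypothetical names made up for illustration. A real setup would presumably swap `toy_search` for an arXiv query and `toy_refine` for a call to a locally hosted LLM, and would add a summarizing step at the end.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Paper:
    url: str
    title: str
    abstract: str


# Hypothetical signatures: a real tool would back these with an arXiv
# search and a locally hosted LLM. Here they are just plain functions.
SearchFn = Callable[[str], list[Paper]]
RefineFn = Callable[[str, str, list[Paper]], str]


def literature_search(question: str, search: SearchFn, refine: RefineFn,
                      max_rounds: int = 3) -> list[Paper]:
    """Search, refine the query from the abstracts that came back, repeat."""
    query = question
    seen: dict[str, Paper] = {}
    for _ in range(max_rounds):
        results = search(query)
        new = [p for p in results if p.url not in seen]
        for p in new:
            seen[p.url] = p
        next_query = refine(question, query, results)
        # Stop once a round finds nothing new or the query stops changing.
        if not new or next_query == query:
            break
        query = next_query
    return list(seen.values())


# Toy stand-ins (assumptions for the demo, no network and no model needed).
CORPUS = [
    Paper("https://example.org/1", "Sparse attention",
          "We study sparse attention for long context transformers."),
    Paper("https://example.org/2", "Long context benchmarks",
          "A benchmark for long context retrieval."),
    Paper("https://example.org/3", "Protein folding",
          "Structure prediction with diffusion."),
]


def toy_search(query: str) -> list[Paper]:
    # Naive keyword match: keep papers whose abstract contains any query term.
    terms = query.lower().split()
    return [p for p in CORPUS if any(t in p.abstract.lower() for t in terms)]


def toy_refine(question: str, query: str, papers: list[Paper]) -> str:
    # Stand-in for the LLM: widen the query once abstracts mention long context.
    mentions = any("long context" in p.abstract.lower() for p in papers)
    if mentions and "benchmark" not in query:
        return query + " benchmark"
    return query


found = literature_search("sparse attention", toy_search, toy_refine)
for paper in found:
    print(paper.title, paper.url)
```

Deduplicating by URL and capping the rounds keeps the loop from going in circles when the refined query keeps returning the same papers.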

> It’s not perfect, as you’d expect, but it turns a minute spent typing out a well-thought-out question into hours’ worth of head start on the research surrounding your question (and does it all without sending any data to OpenAI et al). Getting you over that initial hump of not knowing exactly where to start is where I see a lot of the value of LLMs.

I’ll concede that this seems useful in saving time to find your starting point.

However.

- Is speed as a goal itself a worthwhile thing, or something that capitalist processes push us endlessly toward? Why do we need to be faster?

- In prioritizing speed over slow, tedious personal research, aren’t we allowing ourselves to be put in a position where we might overlook truly relevant research simply because it doesn’t “fit” the “well-thought-out question”? I’ve often found research that isn’t entirely in the wheelhouse of what I’m looking at, but is actually deeply relevant to it. By using the method you proposed, there’s a good chance that I never surface that research because I had a glorified keyword search find “relevancy” instead of me fumbling around in the dark and finding a “Eureka!” moment of clarity with something initially seemingly unrelated.


Fuck being more productive! AI is capitalism maximizing productivity and minimizing labour costs.

AI isn’t targeting tedious labour; the people building it are going after art, music and the creative process. They want to take the human out of the equation of pumping out more content to monetize.

You probably don’t need an LLM to assist you, but the average human is terribly incompetent. I work in higher education and even people with PhDs often fail to type up a coherent email.

> I work in higher education and even people with PhDs often fail to type up a coherent email.

If someone got a PhD without being able to write a coherent sentence, that says more about how we’re handing out PhDs to unqualified people than it does about any need for LLMs to solve that.

AI is not going to help people be smarter or more competent: https://www.microsoft.com/en-us/research/uploads/prod/2025/01/lee_2025_ai_critical_thinking_survey.pdf

I’m convinced that it won’t help incompetent people magically become competent. I’m also convinced that some people will use it to speed up their work.

Yeah, true. Just look at how China is using AI in its government to track, trace and spy on all its citizens.

Big Brother will be an AI

AI takes a LOT of $$ to work, so the companies behind it need to make money somehow.

And that $$ is in more data from users/new content.