But Denuvo

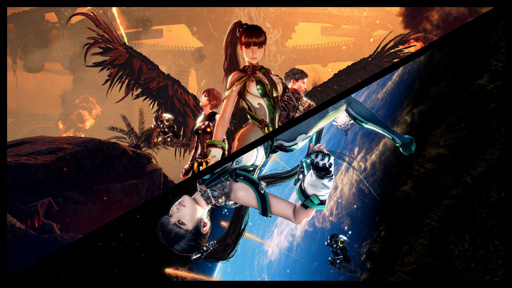

Stellar Blade performs well despite using Denuvo. Imagine how much better it will run when Denuvo is removed.

Or it will run exactly the same because it has a minimal performance impact when implemented correctly, which seems to be the case here.

It’s a UE4 game, not UE5. That helps.

No, don’t follow Denuvo

Stop blaming developers. It’s management that doesn’t want to give the developers the time and money to optimize their games. Devs know how to optimize their games; they aren’t stupid. They can’t do shit if they don’t get the time to work on it.

Also

> So, ports like Stellar Blade on PC are great for fans to see, and I’m hoping game developers and publishers can look at this as a template going forward.

Yeah, that’s not how it works. You can’t look at other games and see how to optimize your game. Every game engine and every game works differently under the hood.

That’s probably mostly true, but optimisation is becoming a lost art form that some devs just don’t know.

Daily reminder that Chris Sawyer coded RollerCoaster Tycoon by himself in x86 assembly. In a cave, with a box of scraps.

No, it doesn’t, lol. I tried to run the demo on the lowest settings and it used all my VRAM. I can run Red Dead Redemption on my 4 GB VRAM GPU, but I can’t run Stellar Blade?

RDR came out in 2010…?

And ran on the Xbox 360… which had 512 MB of memory.

You’re below the minimum VRAM requirement and you say it’s not optimized. Well done.

If you want realistic jiggle physics you just pay the silicon price.