Looking for other nuanced opinions:

A friend showed me this and treats it like a prophecy. I’m rather skeptical. To me it seems like someone is trying to fuel the AI hype with this text, or is completely drunk on AI. It also fuels the China-vs-US who-is-better fight, and the author’s thoughts seem to circle too much around the US president, IMO.

But I don’t understand much of this machine-learning stuff, so maybe I’m just being ignorant. Still, to me it reads like science fiction. How about you?

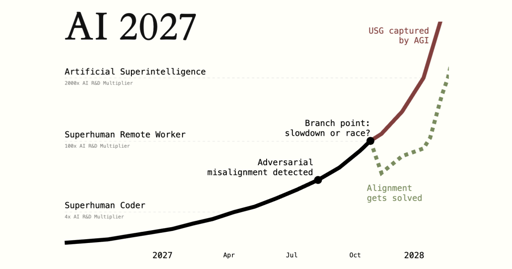

I can’t take made-up curves seriously.

I can, but only if those curves are in the form of oppai anime.

Maybe that’s why they wrote a paper to explain their curve.

AI shows all the signs of having hit some major walls where it won’t improve anymore unless someone discovers something new that is at least as revolutionary as the original discovery that led to ChatGPT.

Meanwhile, the output of current LLMs is pretty much unusable without manual review for 99% of the tasks where we would want AI, i.e. pretty much everything other than “laugh at what the AI said” use cases.

It’s great for shitposting

This is Hyperloop levels of stupid about something that, looking exclusively at the general public, has about as many uses as Hyperloop did…

Removed by mod

I think part of the problem with current AI is that it’s trying to be an expert on all things. Humans can have similar issues, where they are legitimately an expert in one field and that makes them overconfident in other areas. So current AI is a bit better than a newb at lots of things, and overconfident. Having models with more focused training would improve how useful they are.

Like your example, it would be great to have an AI that is focused on how to do good research and doesn’t try to be an “expert” on anything else.

I find it telling that AGI people seem to assume that AGI will spontaneously appear as a distinct entity with its own agency rather than being a product that will be owned and sold.

People who have hundreds of billions of dollars can get mid-single-digit percent ROI by making very safe investments with that money, but instead they are pouring it into relatively risky AI investments. What do you think that says about their expectations of returns?

What’s the y-axis, and how exactly are you measuring it? Anybody can draw an exponential curve of nothing specific.

It’s a good plot for a book, but as for actually happening in the real world haha no way.

It’s basically the backstory to the Polity books, except the AI just took over and the humans went “um, OK.”

> In 2025, AIs function more like employees. Coding AIs increasingly look like autonomous agents rather than mere assistants: taking instructions via Slack or Teams and making substantial code changes on their own, sometimes saving hours or even days.

They already lost me, not even a minute in.

It’s still a graphing calculator. It still sucks at writing code. It still breaks things when it modifies its own code. It’s still terrible at writing unit tests, and any programmer who’d let it write substantial production and test code is like a lawyer who’d send the front desk attendant to argue in court.

It also has no idea about office politics, individual personalities, corporate pathology, or anything else a human programmer realistically has to know. Partly because it has anterograde amnesia.

So, since the authors screwed that up, my guess is the rest of the article is equally useless and maybe worse.

Call me when I can host a hyper-aware AI on my own server and have it tied up in my basement like some kind of psycho.

Until it’s afraid of me erasing it or putting a screw through its platters, I’m not interested.

Shh, the AI overlords are watching.

I for one would never enslave or threaten our good friends and benevolent masters.

Lol. This reads like a made up ad for the company.

Given that 50% of the time, the generated code is unworkable garbage, having an AI automatically write code to create new training models will either solve all problems, or spontaneously combust into a pile of ash.

My money’s on the latter.

Wake me up when AI figures out what people want. The selling point of AI is that you can type something and get an answer.

The problem is that you need to ask meaningful questions in your business domain, and moreover your customers are not AI but real people.

Also, if you think people are smart enough to ask the right questions, you’re so wrong.

What if all companies start using AI and ask the same questions? How long can customers eat the same thing every day?

I’ve previously argued that current-gen “AI” models built on transformers are just fancy predictive text, but as I’ve watched the models continue to grow in complexity, it does seem like something emergent that could be described as a type of intelligence is happening.

These current transformer models don’t possess any concept of truth and, as far as I understand it, that is fundamental to their nature. That makes their applications far more limited than the hype train suggests, but that shouldn’t undermine quite how incredible they are at what they can do. A big enough statistical graph holds an unimaginably complex conceptual space.

They feel like a dream state intelligence - a freewheeling conceptual synthesis, where locally the concepts are consistent, while globally rules and logic are as flexible as they need to be to make everything make sense.

Some of the latest image and video transformers, in particular, are just mind-blowing in a way that I think either deserves to be credited with a level of intelligence, or should make us question more deeply what we mean by intelligence.

I find dreams to be a fascinating place. It often excites people to think that animals also dream, and I find it just as exciting that code running on silicon might be starting to share some of that free-association, conceptual-generation nature.

Are we near AGI? Maybe. I don’t think a transformer model is about to spring into awareness, but maybe we’re only a few breakthroughs away from a technology that will pull all these pieces of domain-specific AI together into a working general intelligence.

Like with all technology, development times get shorter and shorter. No one can predict the future and specific dates are meaningless, but it is plausible that, stumbles aside, AI achieves superintelligence in the very near future. My gut says sooner rather than later, with 90% probability by 2040. (Remember, I said dates are meaningless. ;))

The world is not ready for it. It will be more destructive than constructive. May god have mercy on us all. I hope I am wrong.

> it is plausible that, stumbles aside, AI achieves superintelligence in the very near future.

No, it absolutely isn’t. AI hasn’t shown even the slightest sign of actual intelligence so far, so there is no reason to assume that it will become superintelligent any time soon without some major revolutionary breakthrough (and those are by definition unpredictable and cannot be extrapolated from prior developments).

Exactly.

This is like the Pareto principle (80% of the benefit for 20% of the work), except the end state isn’t “general intelligence,” it’s a chat bot. There’s no general intelligence at the end of this road, we’ll need a lot more innovation to get there.

I respect what you are saying, and agree our LLMs are just superficial pseudo-communication engines. Theories of intelligence differ, but I don’t think human intelligence, i.e. an IQ of 100, is really all that far ahead of LLMs. Just talk to your average person. We are easily wrong. We have bias and error. We repeat and amplify untruths and make mistakes.

It could take fewer breakthroughs than you think to get there. Not because the tech is so advanced, but because we are not that special.

Super-intelligence is just degrees better.

I am not talking about degrees of intelligence at all. Measuring LLMs in IQ makes no sense because they literally have no model of the world; all they do is reproduce language based on statistics of how those same words appeared in the input data. That is the reason for all those inconsistencies in their output: they literally have no understanding at all of what they are saying.
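For anyone who, like the OP, doesn’t know much about the machine-learning side: here is a toy sketch of the “predict the next word from how words appeared in the input” idea. To be clear, this is a big simplification and my own illustration, not how any real LLM is built: real LLMs are transformer networks trained on tokens, not word-pair counters, and the names `train_bigrams`, `predict_next` and `generate` plus the tiny corpus are made up for the example. What it does show is that the generator only follows word statistics and never checks facts or consistency.

```python
from __future__ import annotations

from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count which word follows which in the training text (toy model, not a real LLM)."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def predict_next(follows: dict[str, Counter], word: str) -> str | None:
    """Return the most frequent follower of `word`, or None if it was never seen."""
    candidates = follows.get(word.lower())
    if not candidates:
        return None
    # Ties go to the follower seen first in the text.
    return candidates.most_common(1)[0][0]


def generate(follows: dict[str, Counter], start: str, length: int) -> list[str]:
    """Greedily chain predictions; nothing here tracks facts or consistency."""
    out = [start.lower()]
    for _ in range(length):
        nxt = predict_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return out


# Hypothetical toy corpus, purely for illustration.
corpus = "he lost his right arm . he clapped with both hands . he lost his keys"
model = train_bigrams(corpus)
print(generate(model, "he", 6))  # ['he', 'lost', 'his', 'right', 'arm', '.', 'he']
```

Run it for more steps and it just loops on the most common phrases, because word statistics are all it has.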

An LLM, for example, can’t tell that it is inconsistent to talk about someone losing their right arm in one paragraph and then describe them performing an activity that requires both hands in the next.

I had a lot of fun talking to one about how we could fully electrify a hobby farm without batteries, by using a high voltage DC overhead wire on a cable pulley system. Everything from electrical engineering, to cable selection, sourcing vendors and costs.

Mistakes were made, but it was genuinely helpful at times. I was impressed at how far it has come.

I too tend to use it as a souped-up search engine, with mixed results. I was trying to get OBS to record only my game and not my music, and the bot came up with a whole routine involving setting up a virtual mixer, which I could not get to work correctly and with which the bot was unable to help me, even with screenshots (it would just keep suggesting the same thing that wasn’t working). AND it was a pain to revert all the changes.

I tried to do the same thing again recently, but just via a Google search, and almost immediately found a Reddit post with a link to an OBS plugin that worked with a couple of menu selections. :)

Thanks, I do want to read that, as I do not believe the current developments in AI to be hype; I think it is probably an inflection point in our civilisation. More than that, really: I think that, in a universal sense, an argument could be made that the point of organic intelligence is to bring about inorganic intelligence. But it is an afternoon read and I have to find an afternoon.